Synopsis

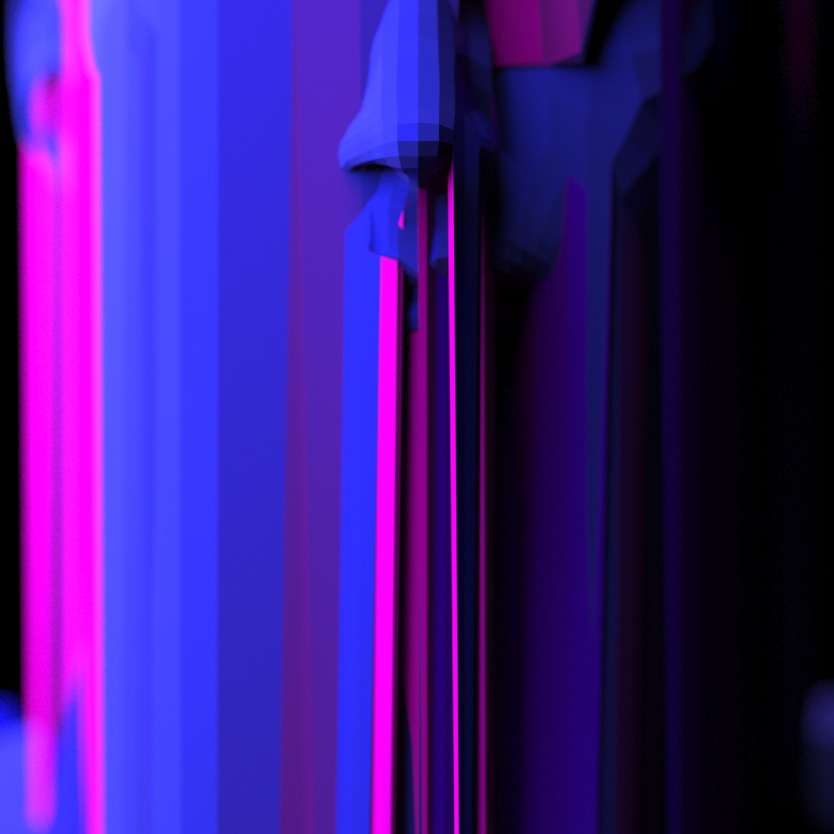

The machine sits confidently, humming quietly. On the glowing monitor: 'You are 21% human.'

This project was an investigation into the intangible realm of machine vision and a contemplation of human identity in the age of machine learning and AI.

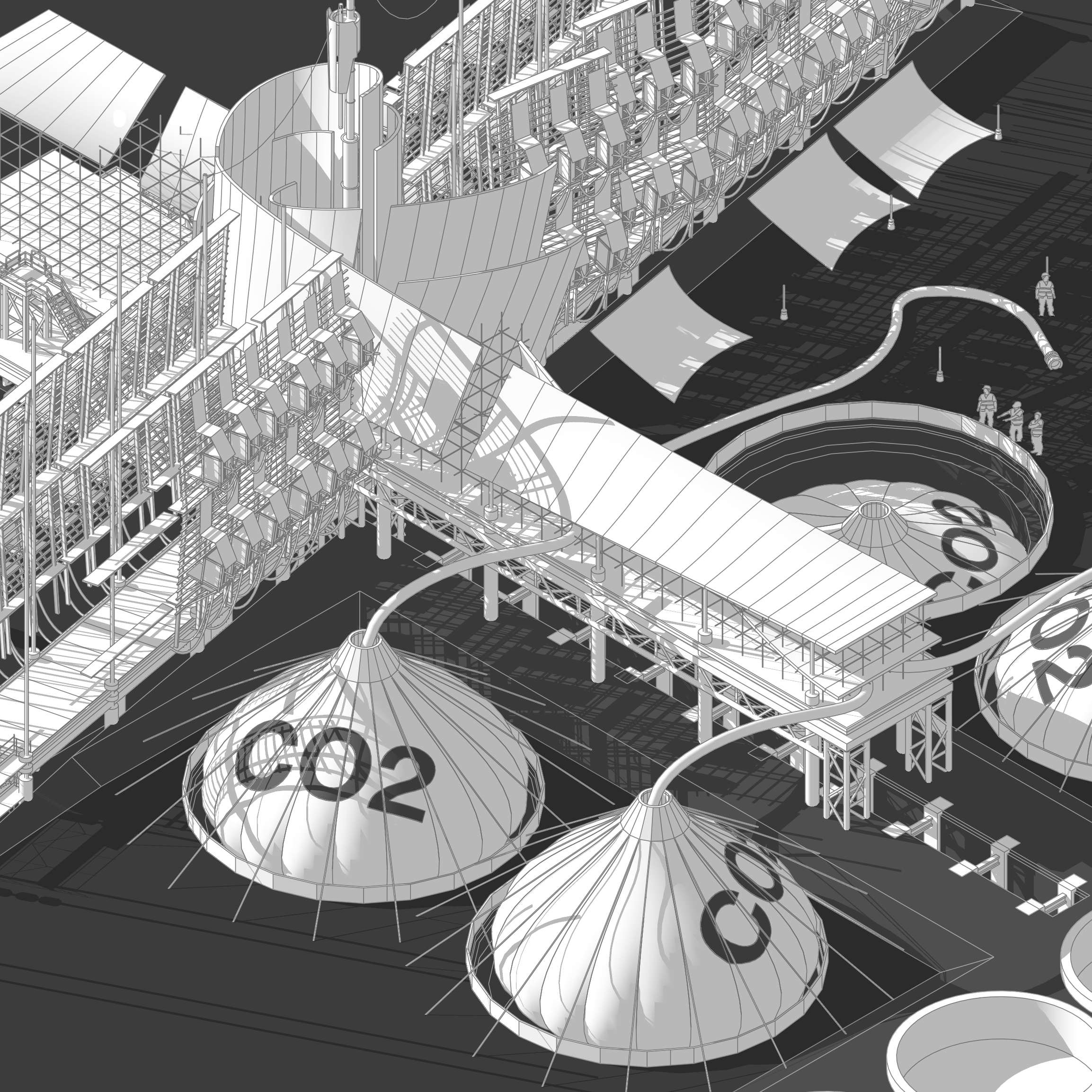

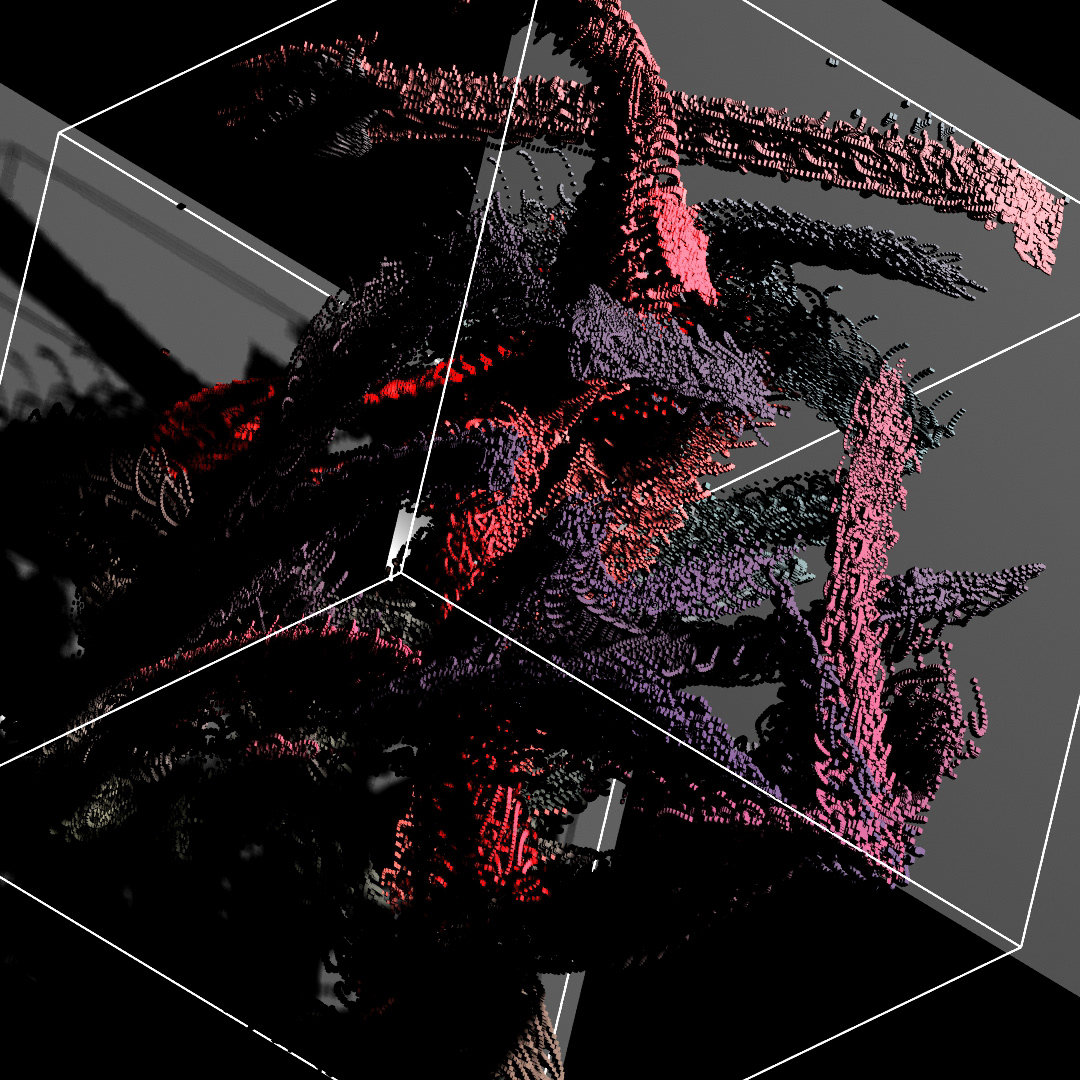

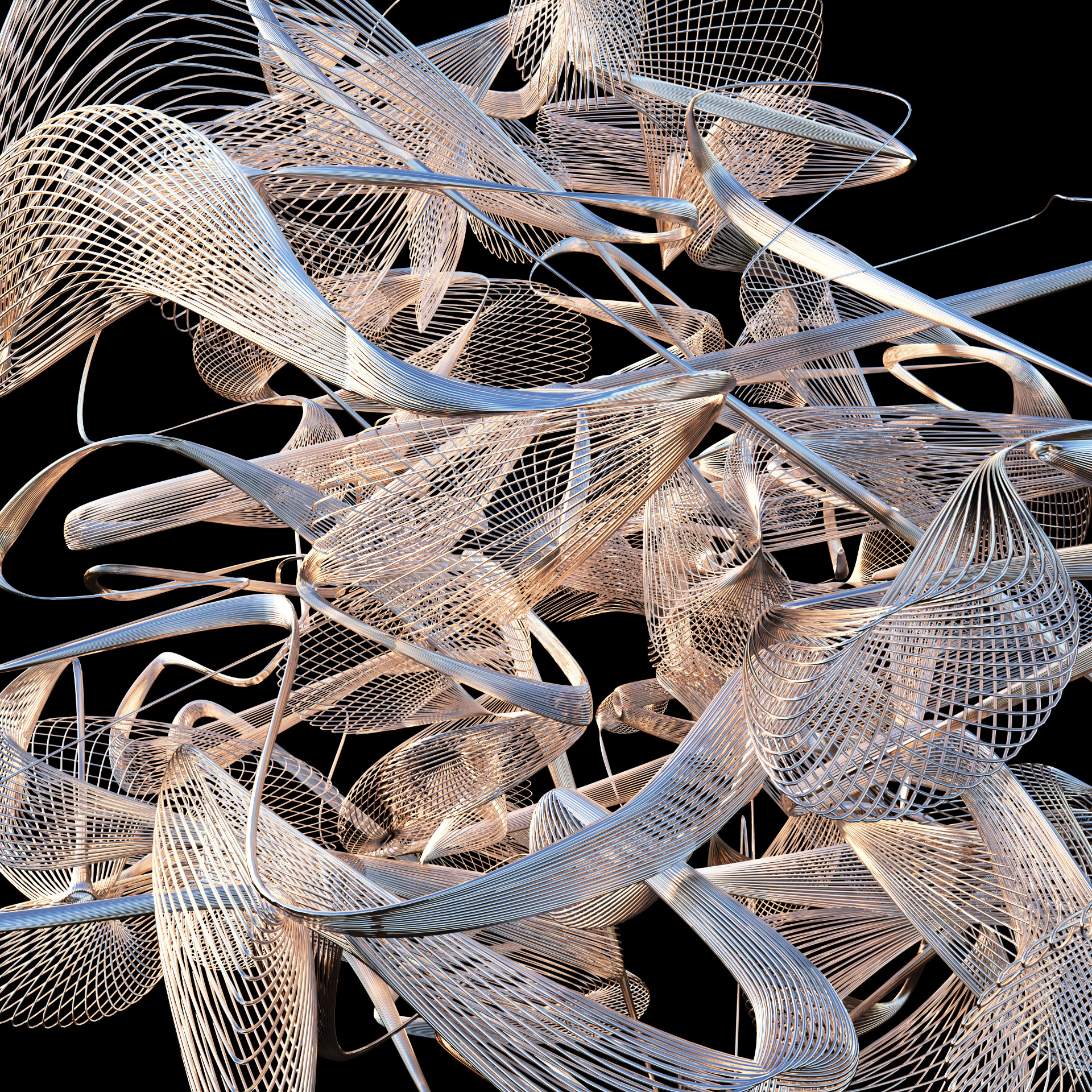

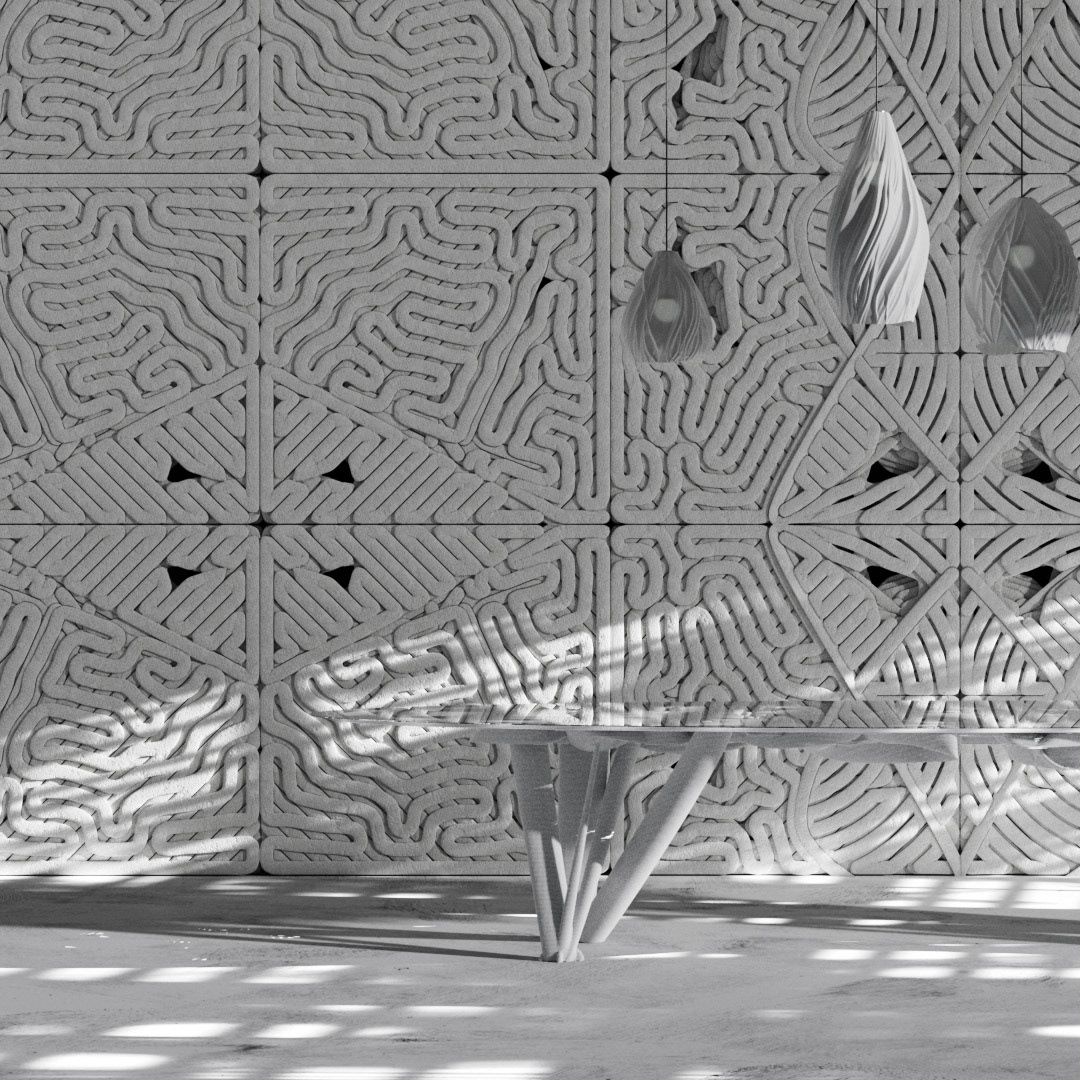

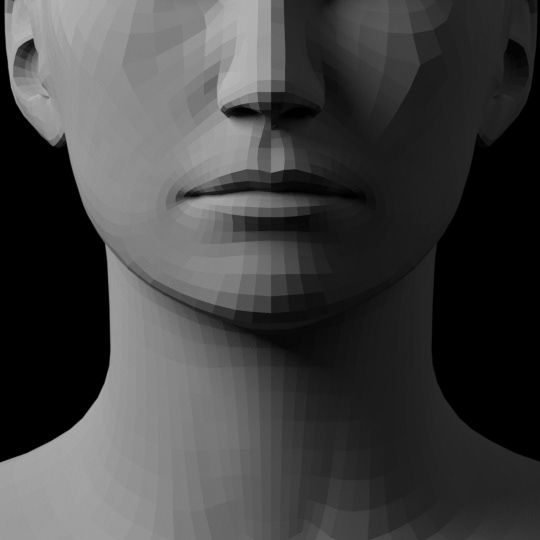

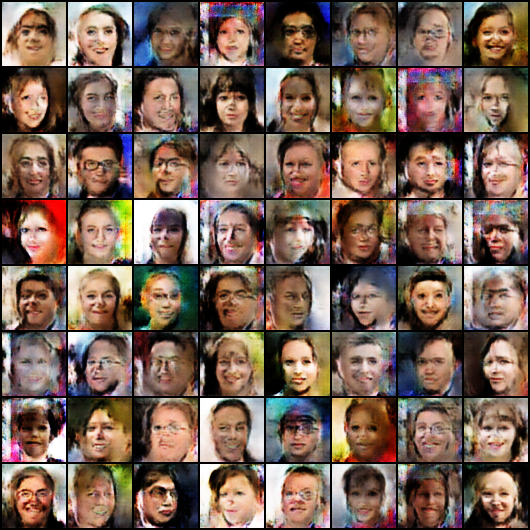

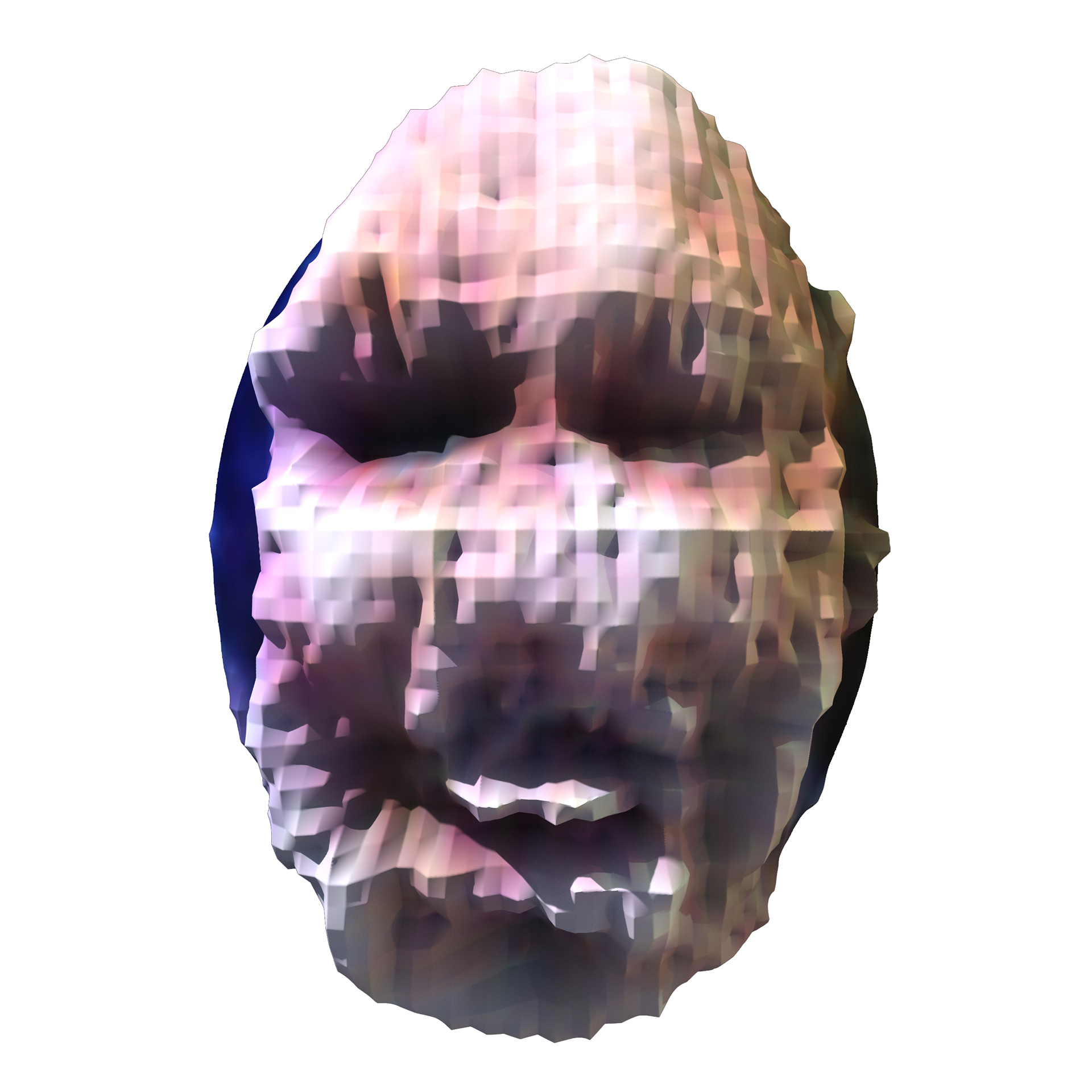

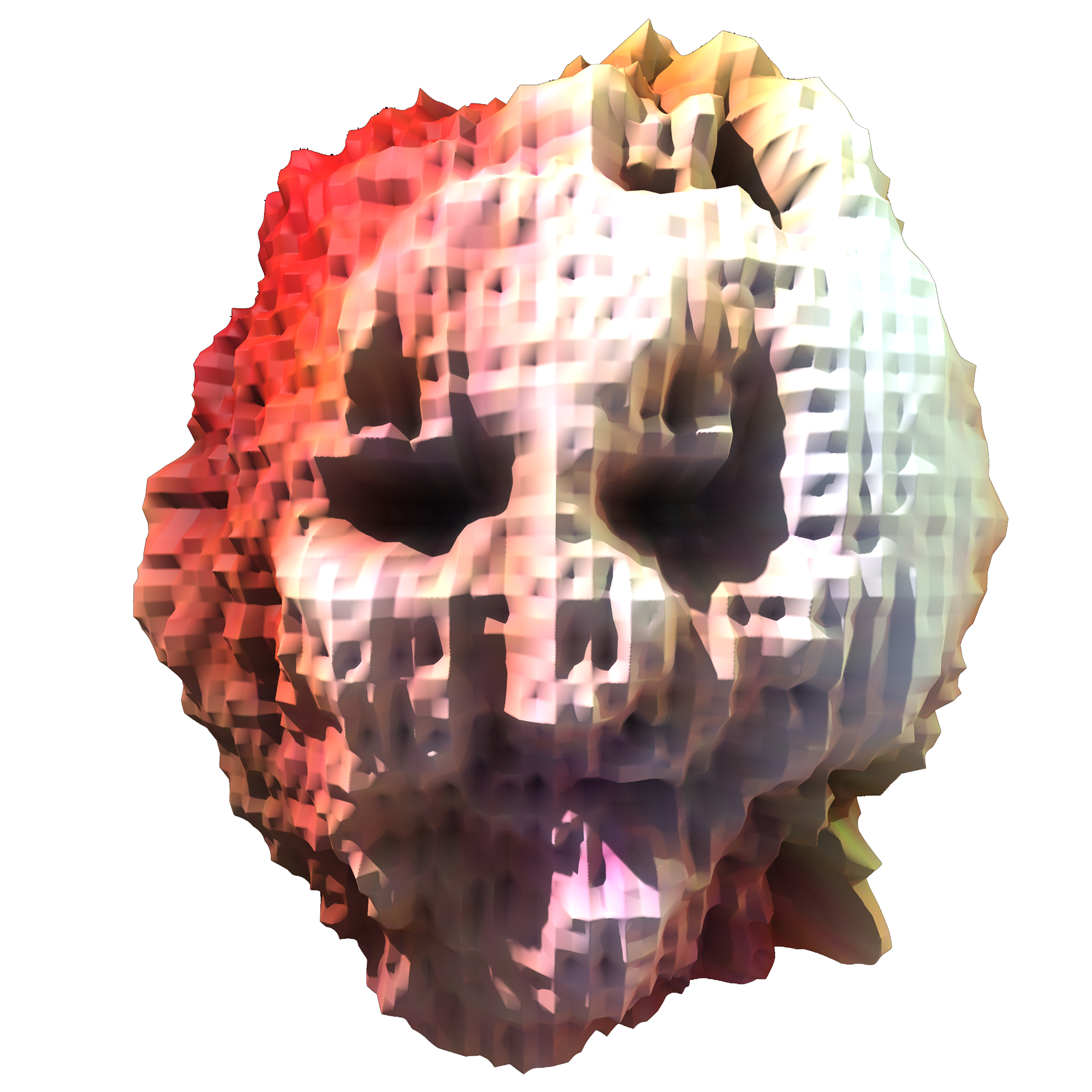

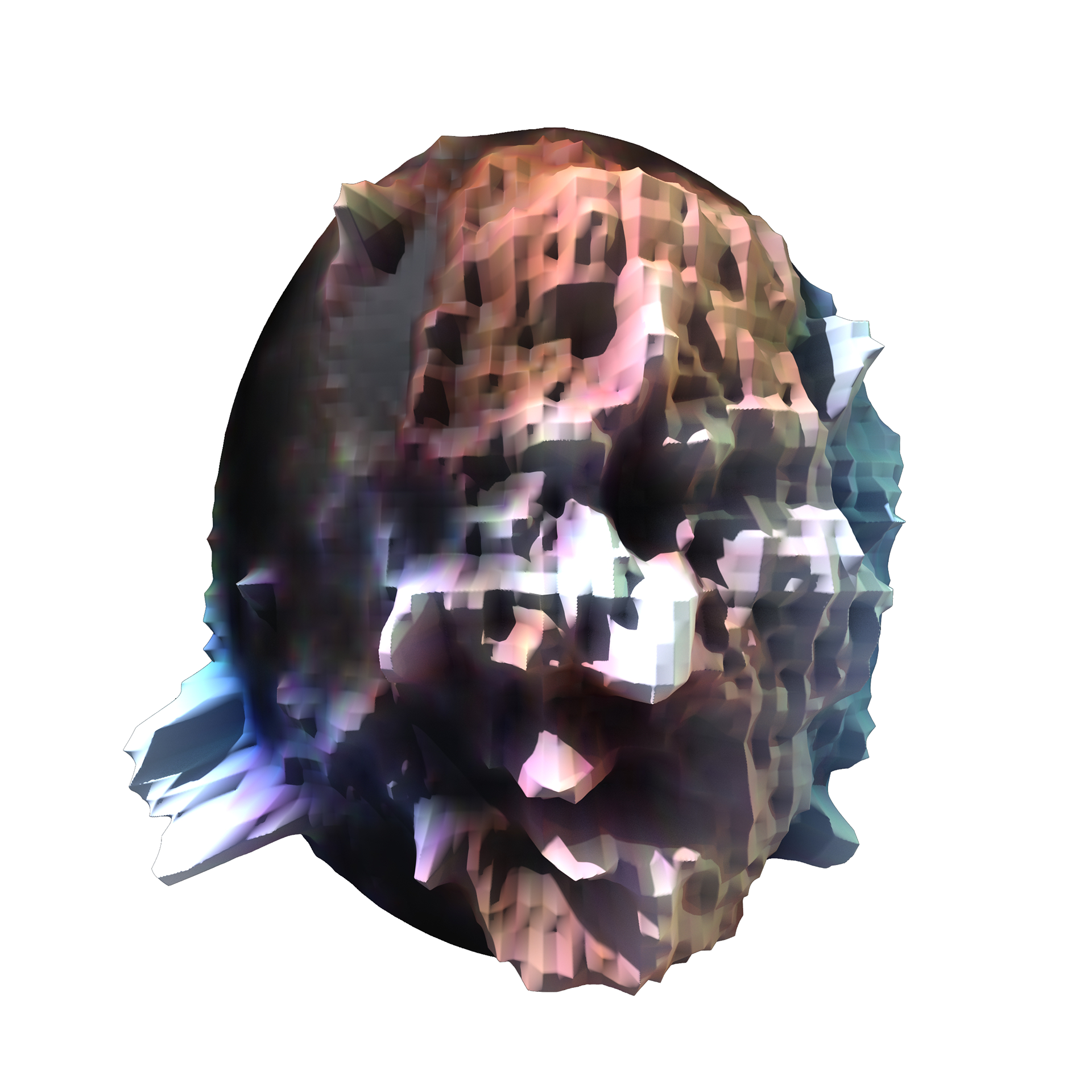

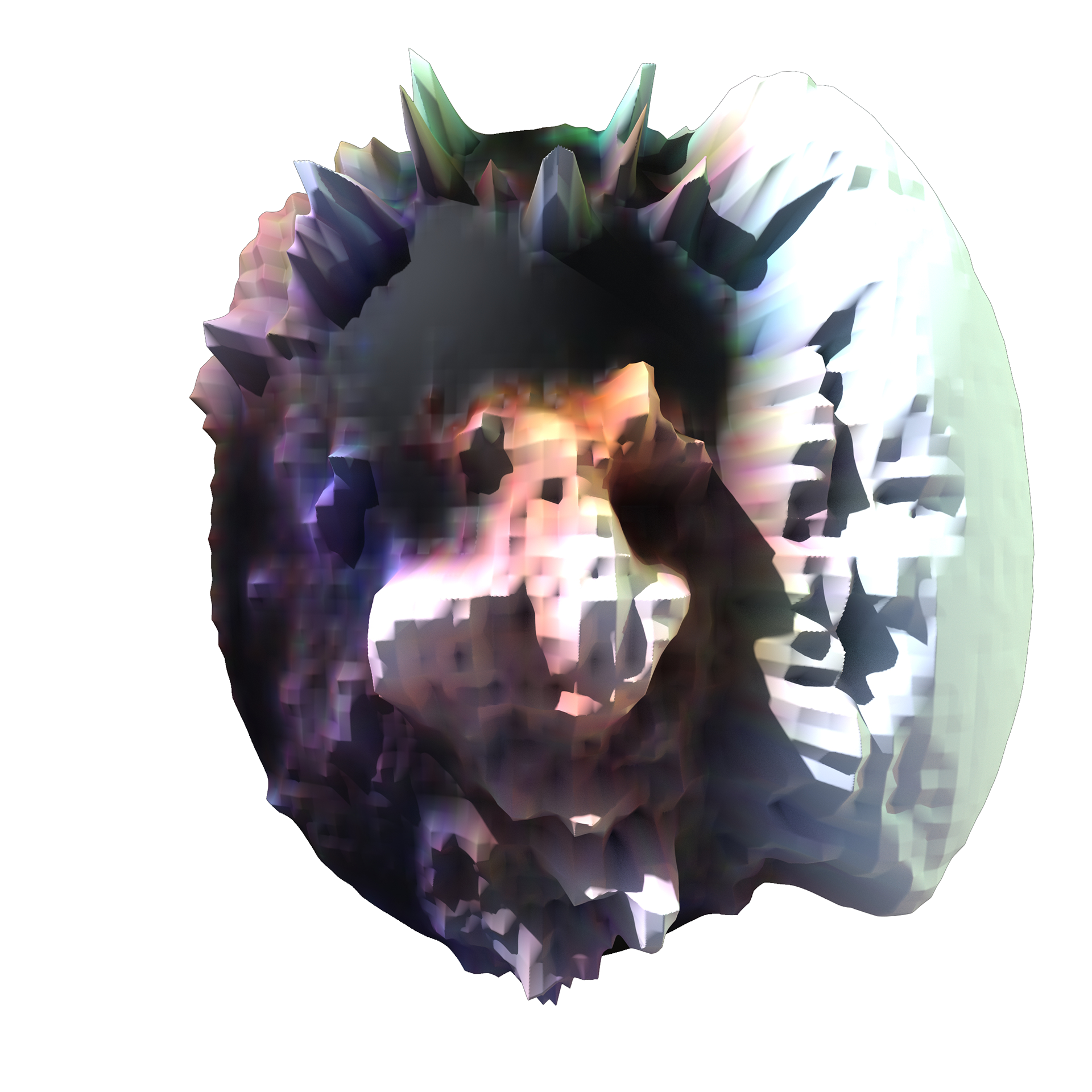

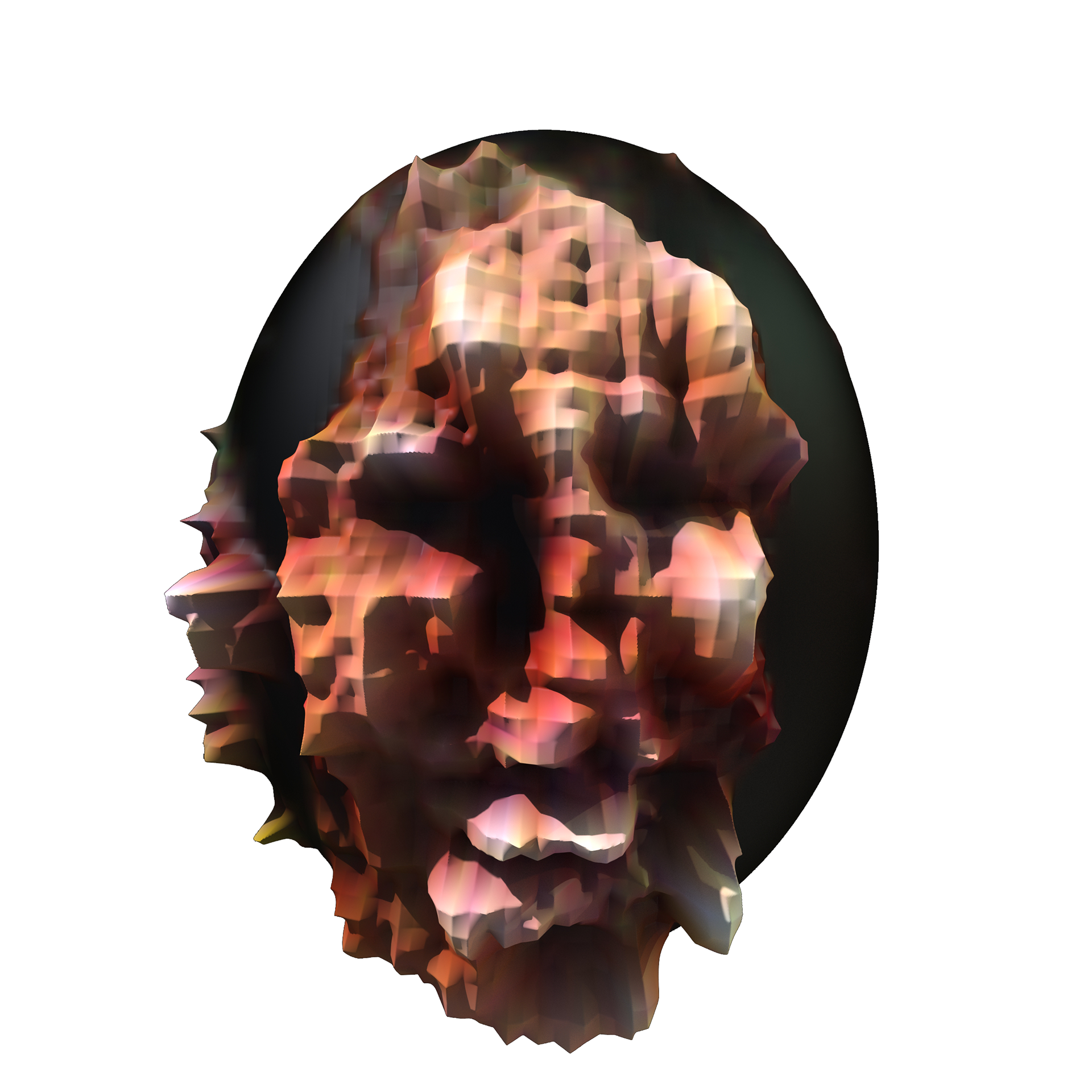

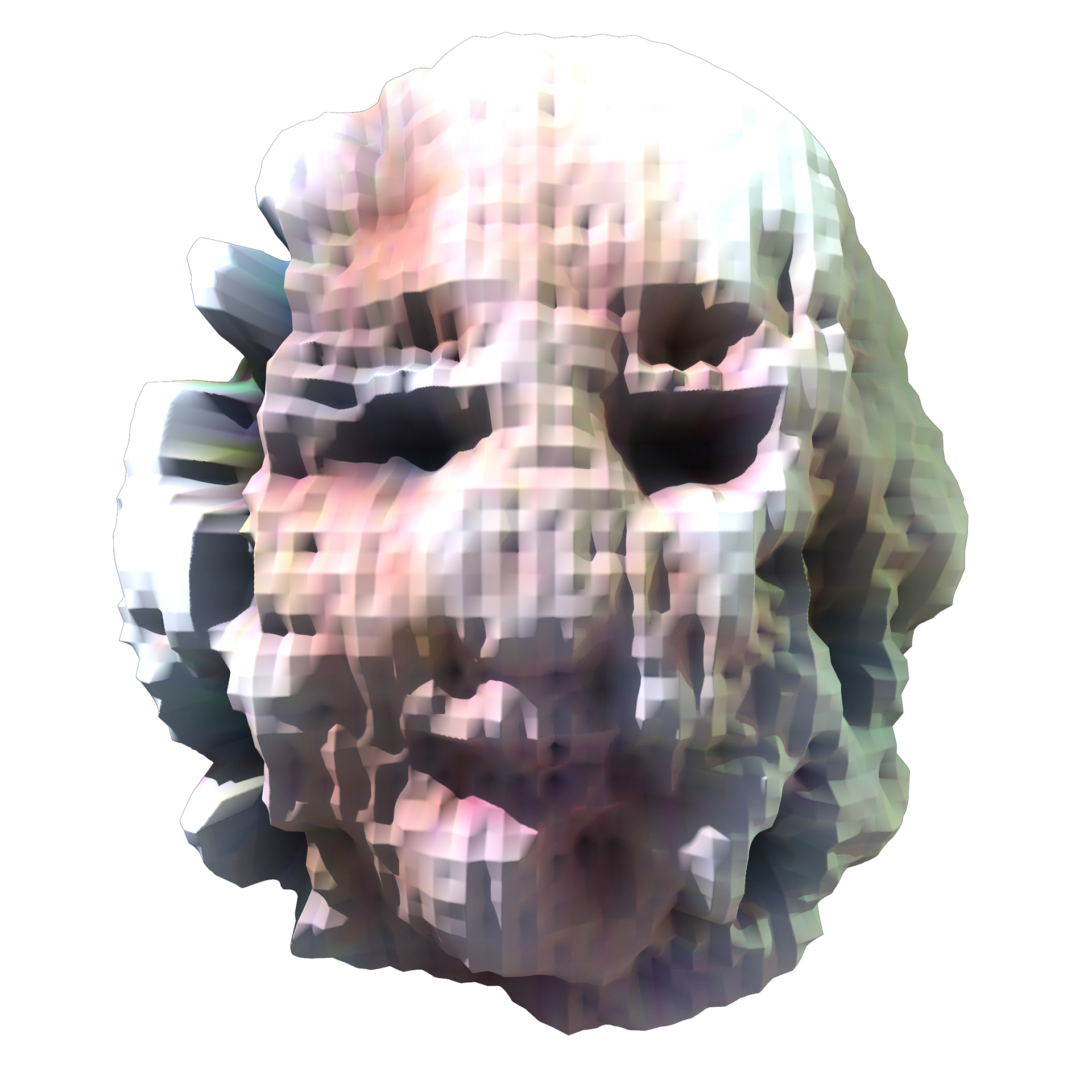

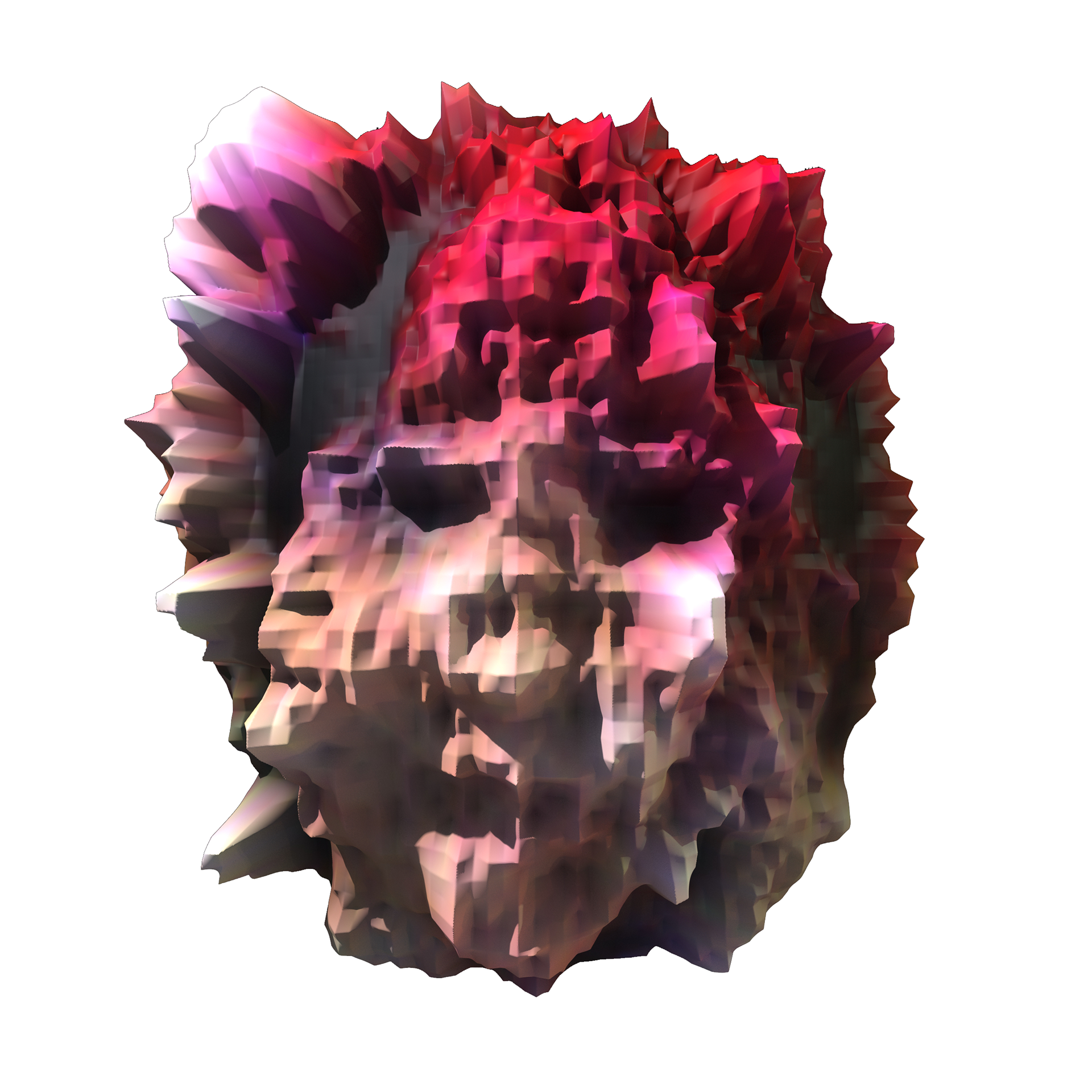

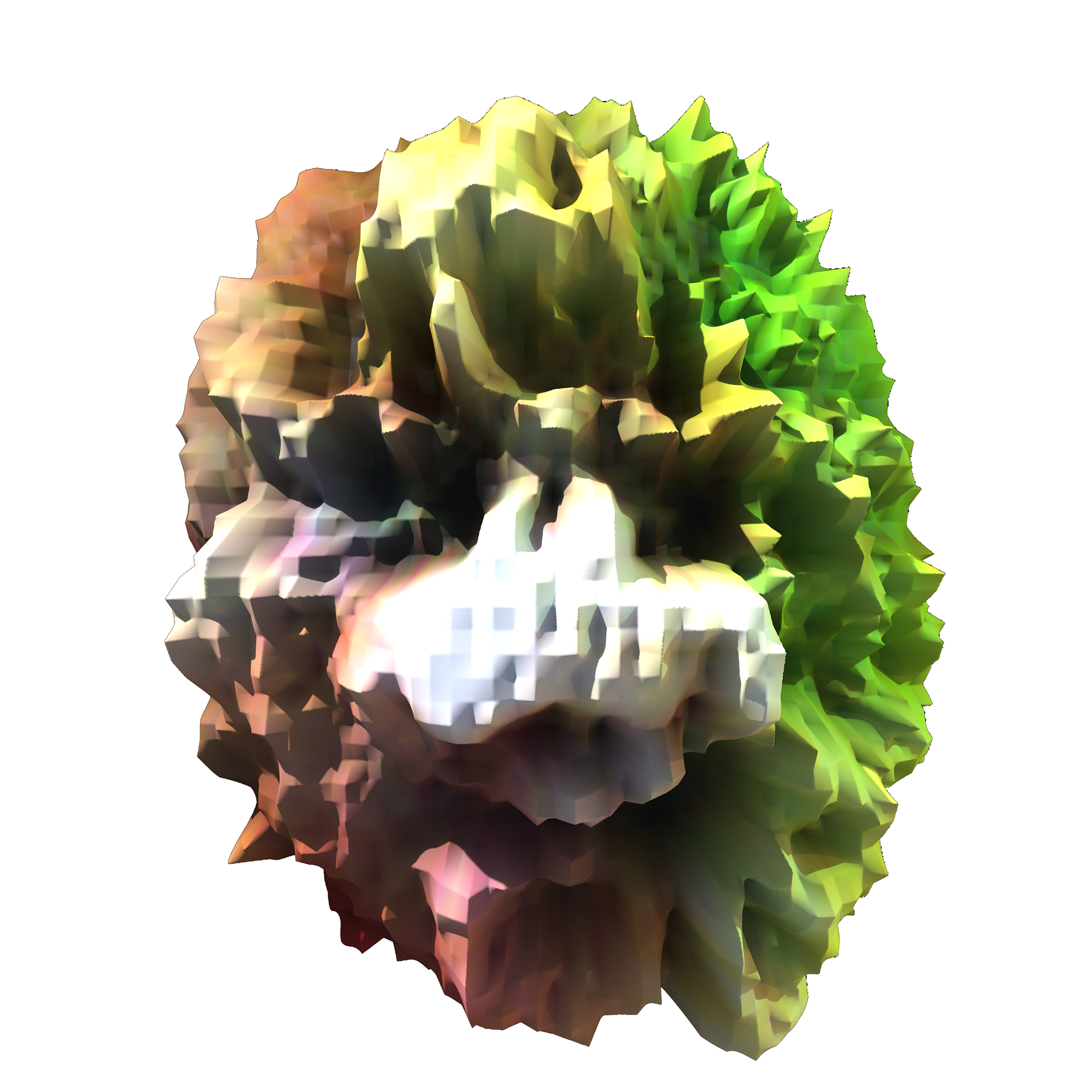

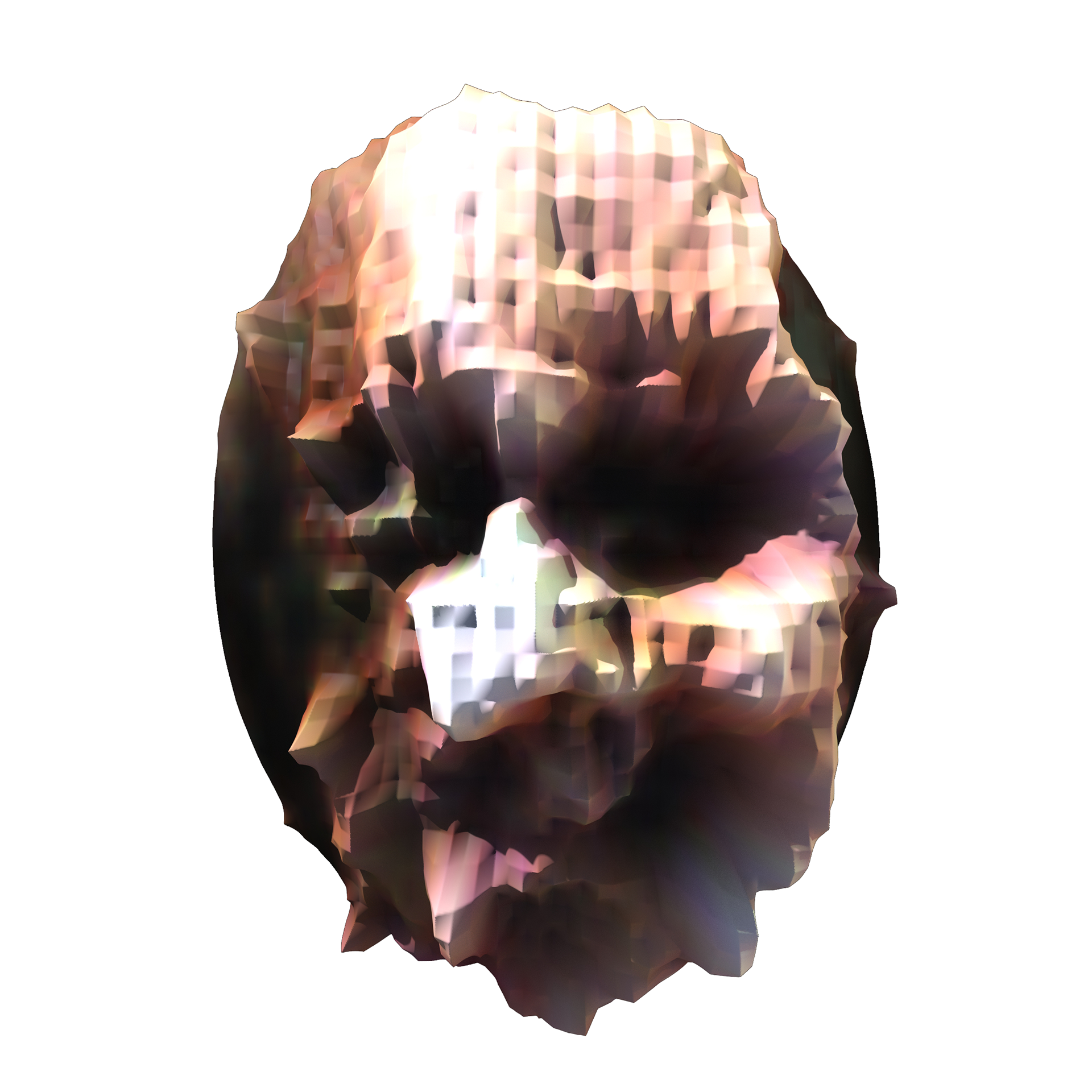

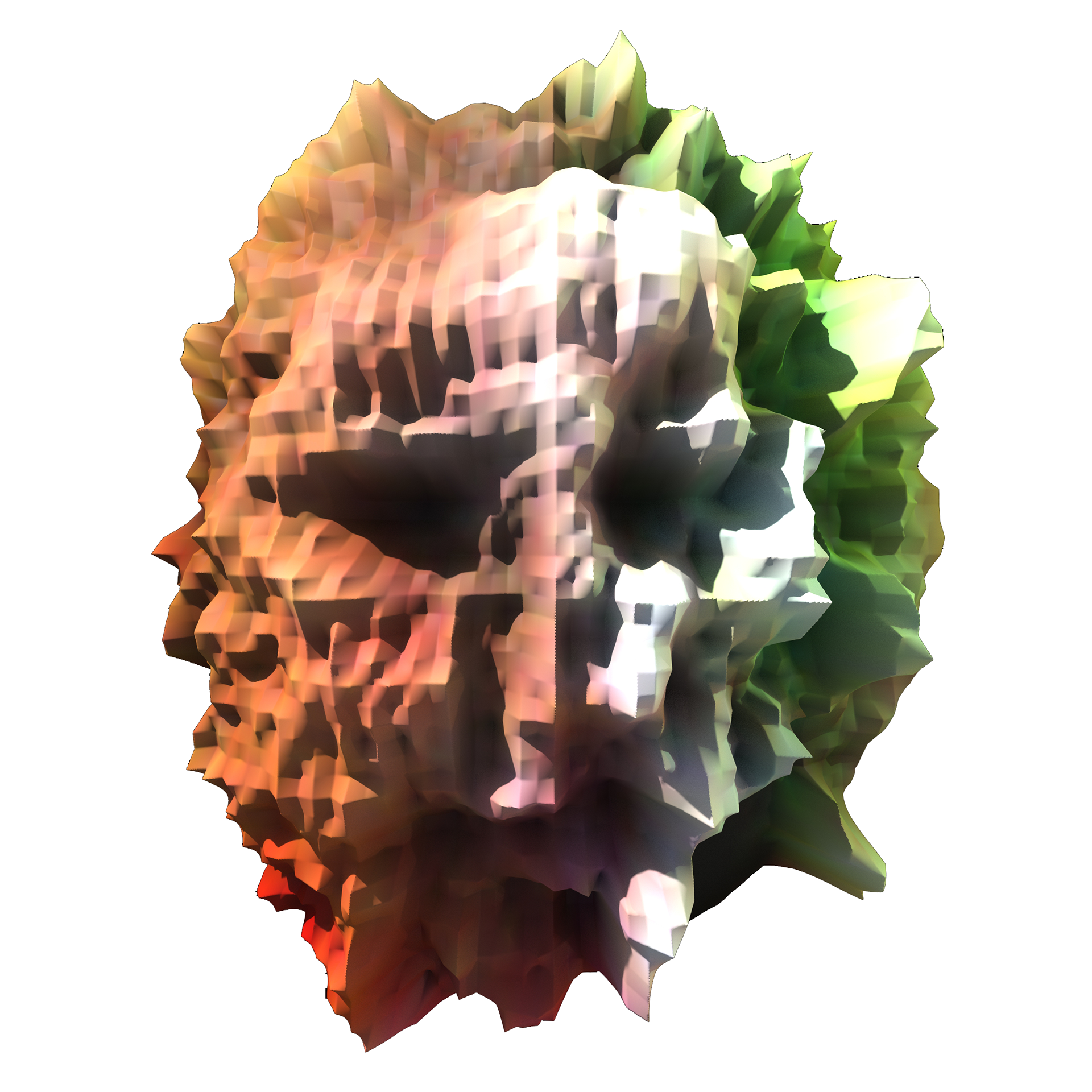

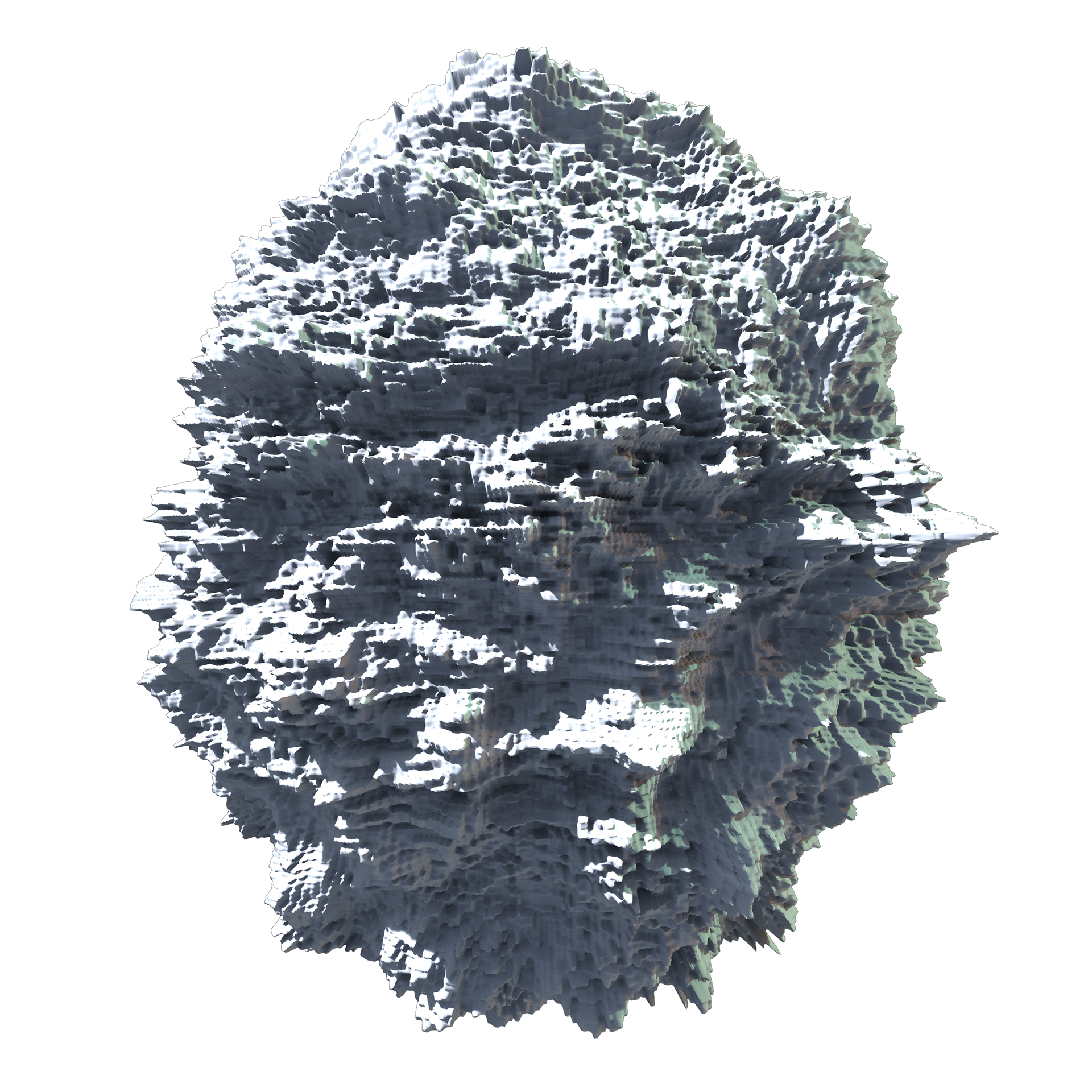

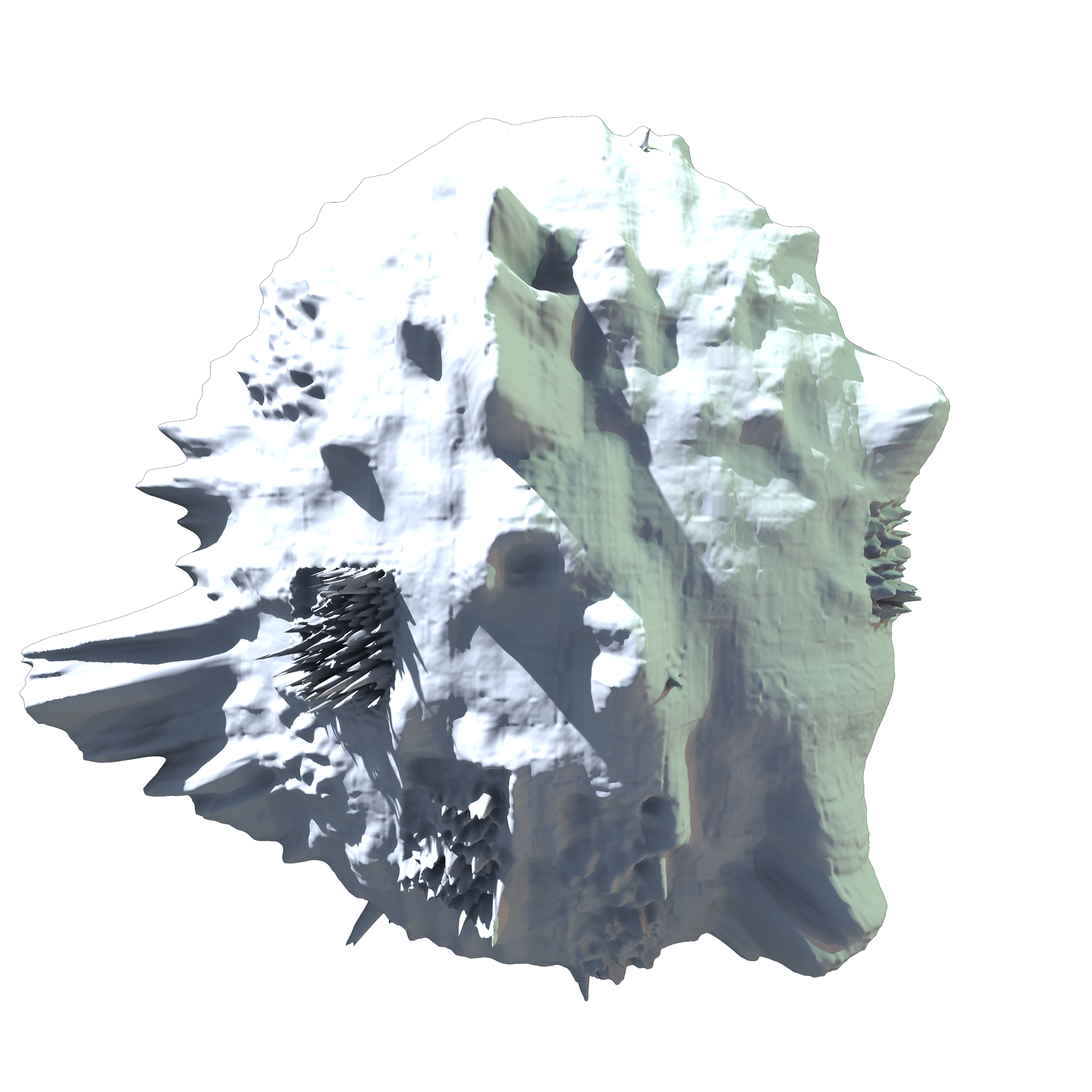

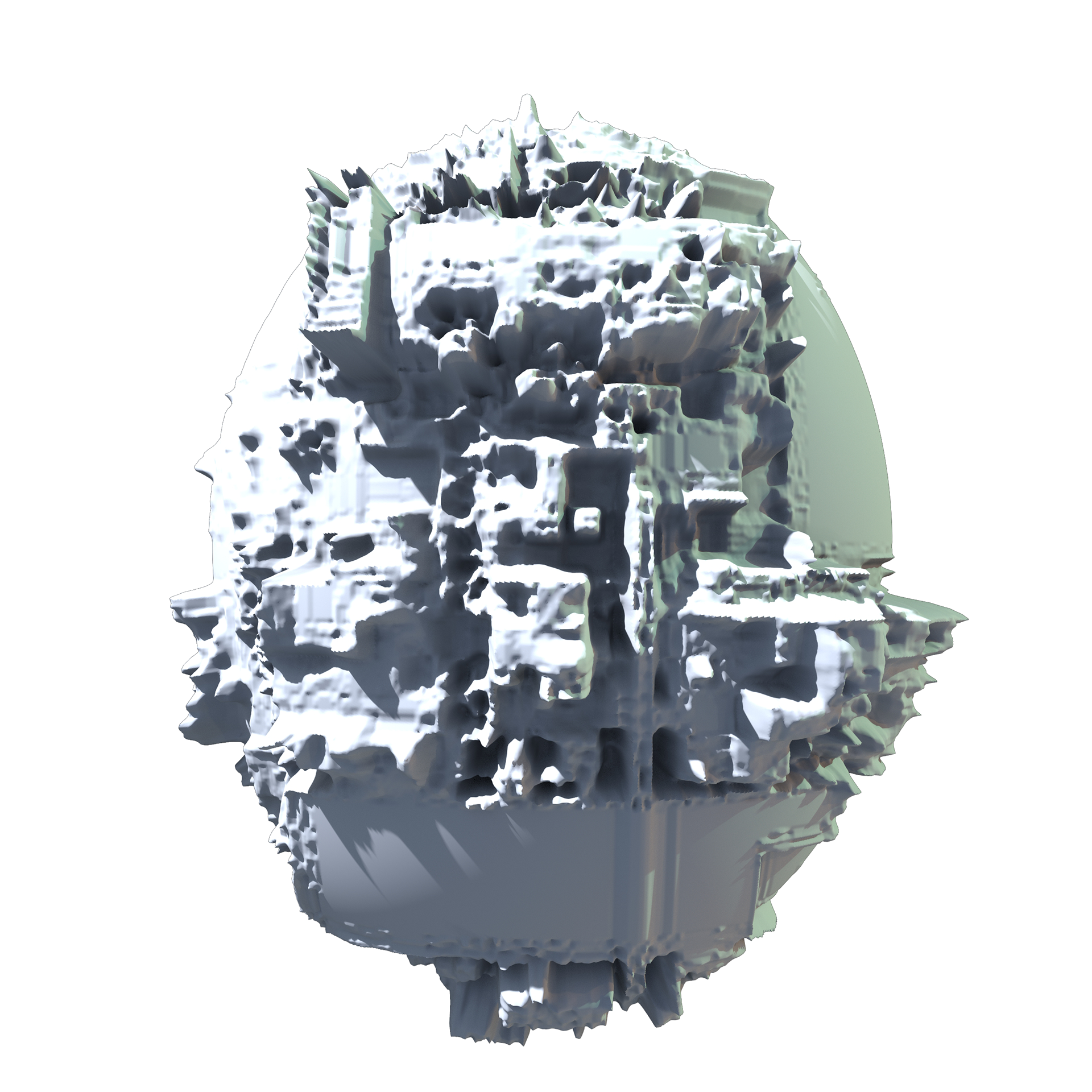

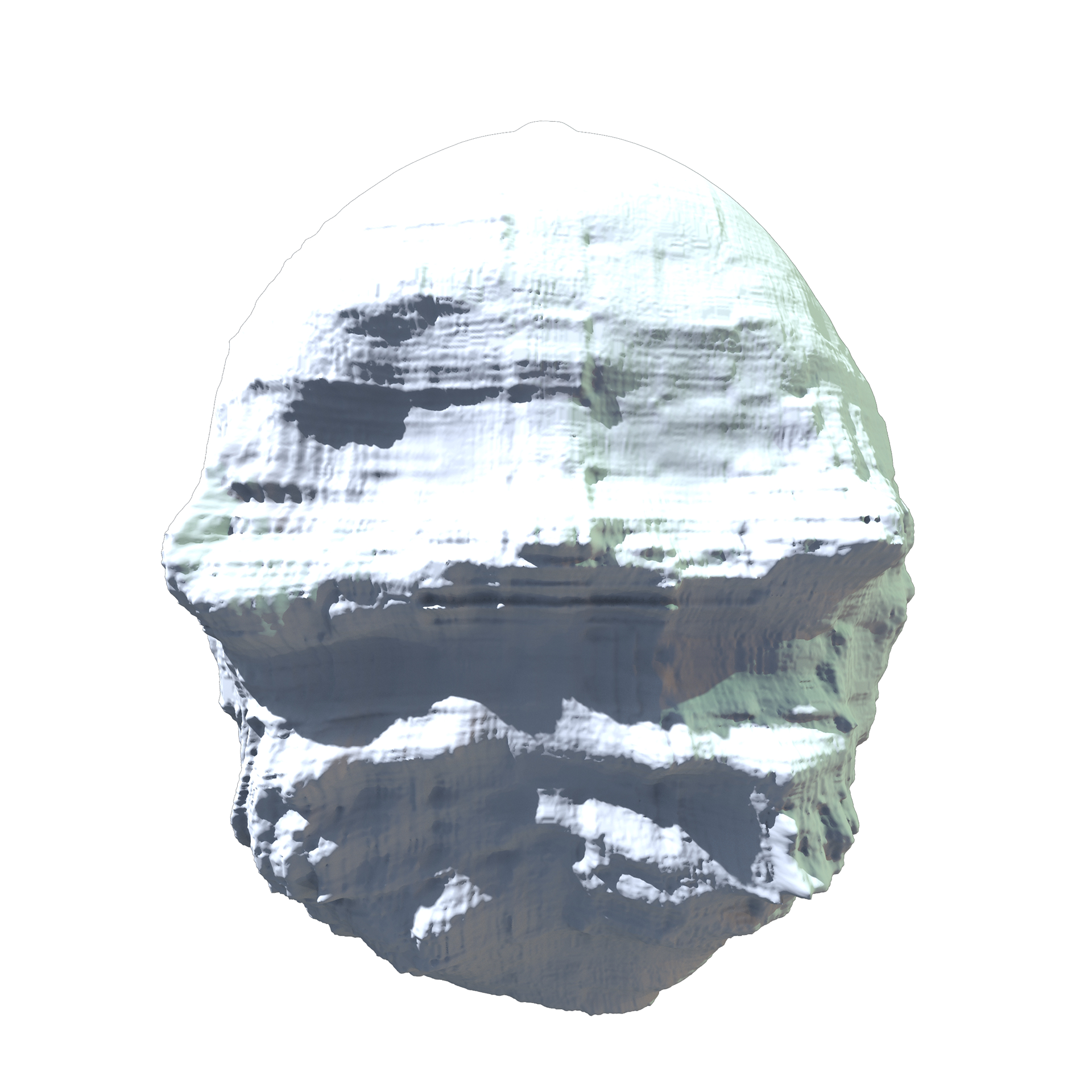

Using a generative adversarial network (GAN), a machine-learning model, a computer is taught to imagine and generate human faces. Several 2D face images, synthesized from computer-generated random noise, are extracted. This low-resolution 2D data is manually processed into heightmaps, which are then applied to a base geometry to visualize the faces in three-dimensional form.
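The heightmap step can be illustrated with a minimal sketch. It assumes the heightmap is a 2D grid of grayscale values from 0 to 255 and that the base geometry is a flat rectangular grid; the project's actual base geometry and tooling are not documented here. The function names `heightmap_to_vertices`, `grid_faces` and `to_obj`, and the parameters `spacing` and `max_height`, are hypothetical. Each pixel displaces one grid vertex along z, and the result is written as a Wavefront OBJ mesh that a 3D-printing workflow could import.

```python
from typing import List, Tuple

Vertex = Tuple[float, float, float]
Face = Tuple[int, int, int]


def heightmap_to_vertices(heightmap: List[List[int]], spacing: float = 1.0,
                          max_height: float = 10.0) -> List[Vertex]:
    """Displace a flat grid along z by normalized grayscale values (0-255).

    Hypothetical helper: brighter pixels become higher vertices.
    """
    vertices: List[Vertex] = []
    for row, values in enumerate(heightmap):
        for col, value in enumerate(values):
            z = (value / 255.0) * max_height  # map 0..255 to 0..max_height
            vertices.append((col * spacing, row * spacing, z))
    return vertices


def grid_faces(rows: int, cols: int) -> List[Face]:
    """Split each grid cell into two triangles (0-based vertex indices).

    Winding is counter-clockwise when viewed from +z, so normals face up.
    """
    faces: List[Face] = []
    for r in range(rows - 1):
        for c in range(cols - 1):
            i = r * cols + c
            faces.append((i, i + 1, i + cols))
            faces.append((i + 1, i + cols + 1, i + cols))
    return faces


def to_obj(vertices: List[Vertex], faces: List[Face]) -> str:
    """Serialize the mesh as Wavefront OBJ text (OBJ indices are 1-based)."""
    lines = [f"v {x} {y} {z}" for x, y, z in vertices]
    lines += [f"f {a + 1} {b + 1} {c + 1}" for a, b, c in faces]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Tiny hypothetical 3x3 heightmap; a real one would be derived from a GAN output image.
    hm = [[0, 128, 0], [128, 255, 128], [0, 128, 0]]
    verts = heightmap_to_vertices(hm)
    tris = grid_faces(len(hm), len(hm[0]))
    print(to_obj(verts, tris))
```

A displaced grid is the simplest "base geometry"; wrapping the same displacement onto a curved, face-like surface would follow the same idea, offsetting each vertex along its surface normal instead of along z.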

Role

Designer, Researcher

Credits

Mentor // Marjan Colletti

Mentor // Javier Ruiz

Photographer // Paul Kohlhaussen

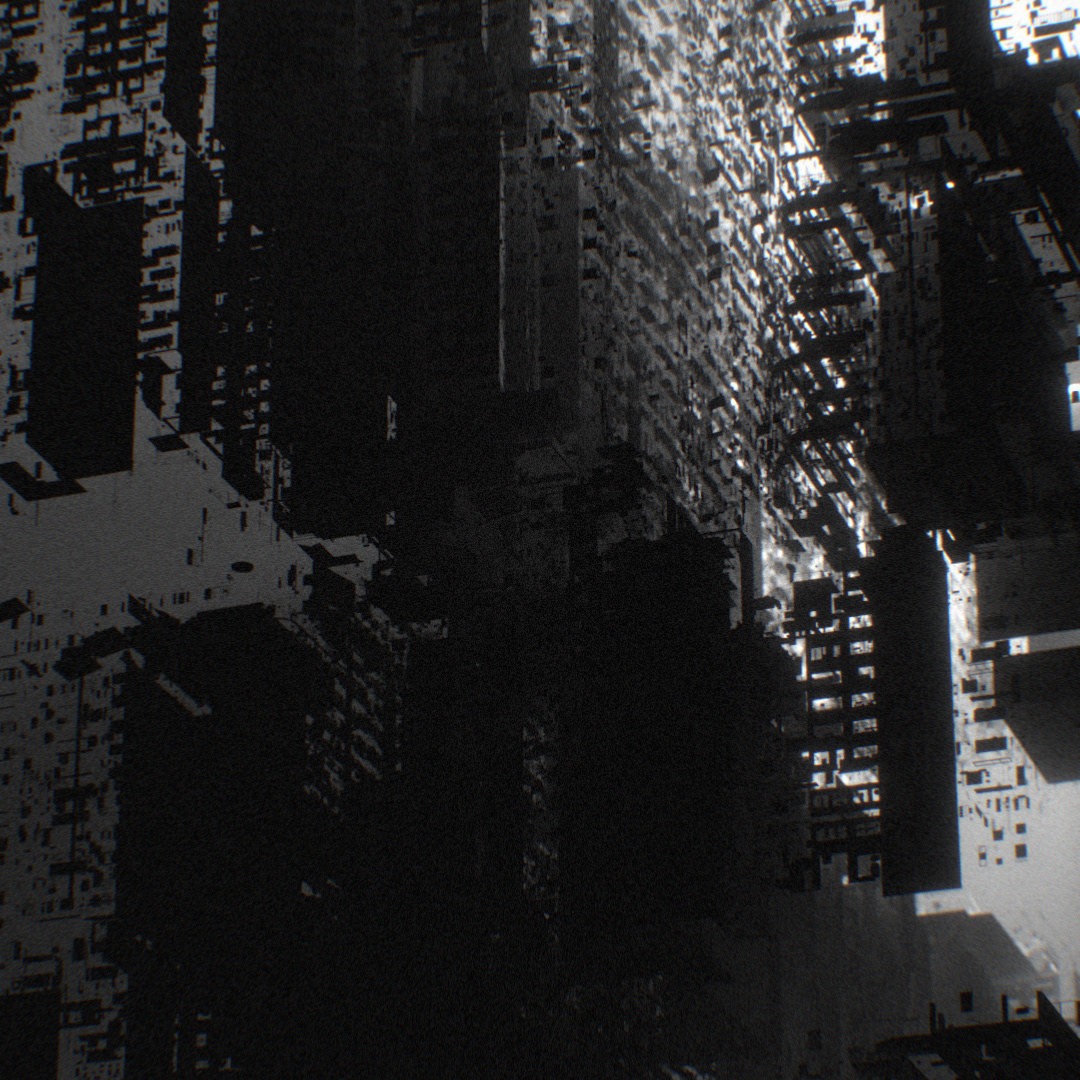

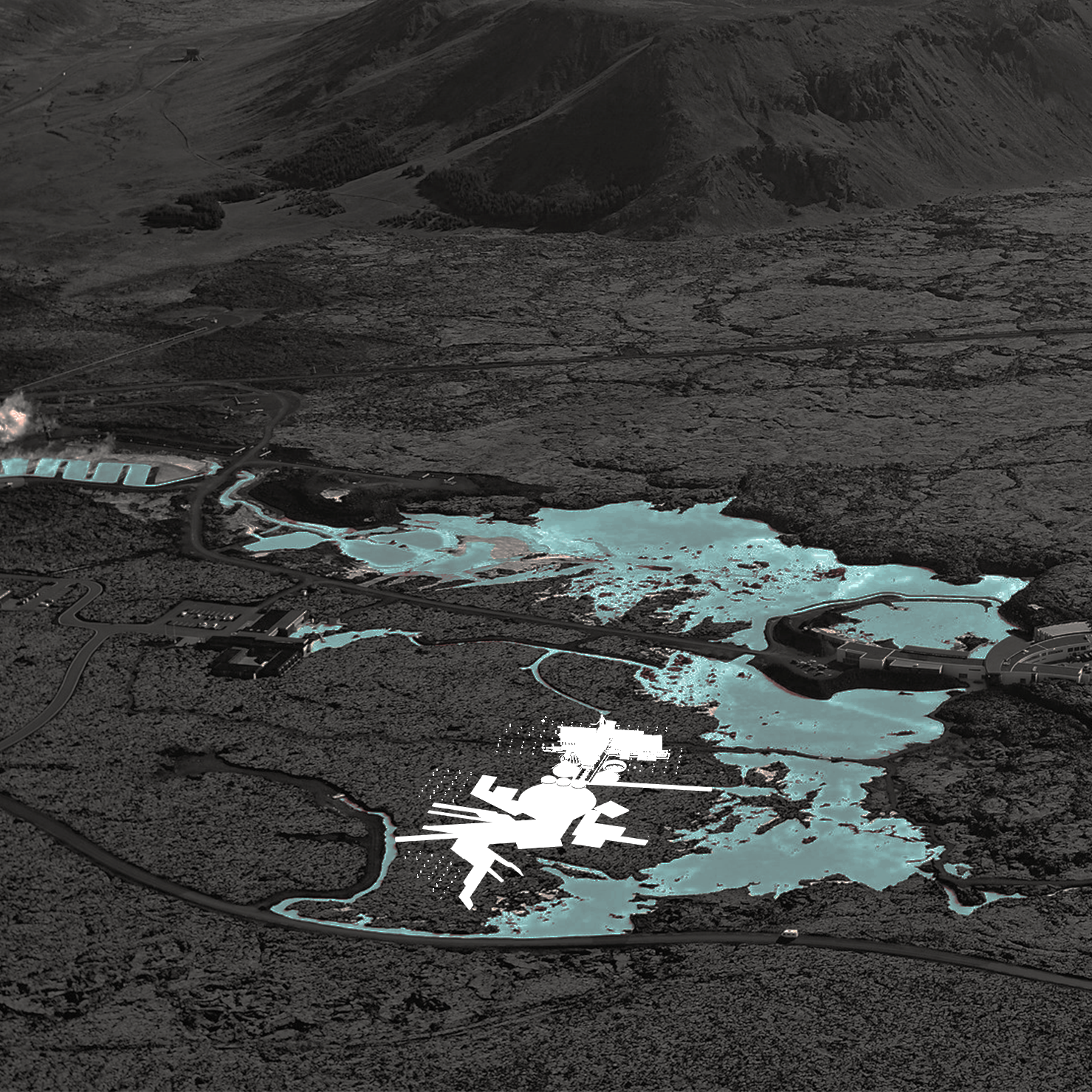

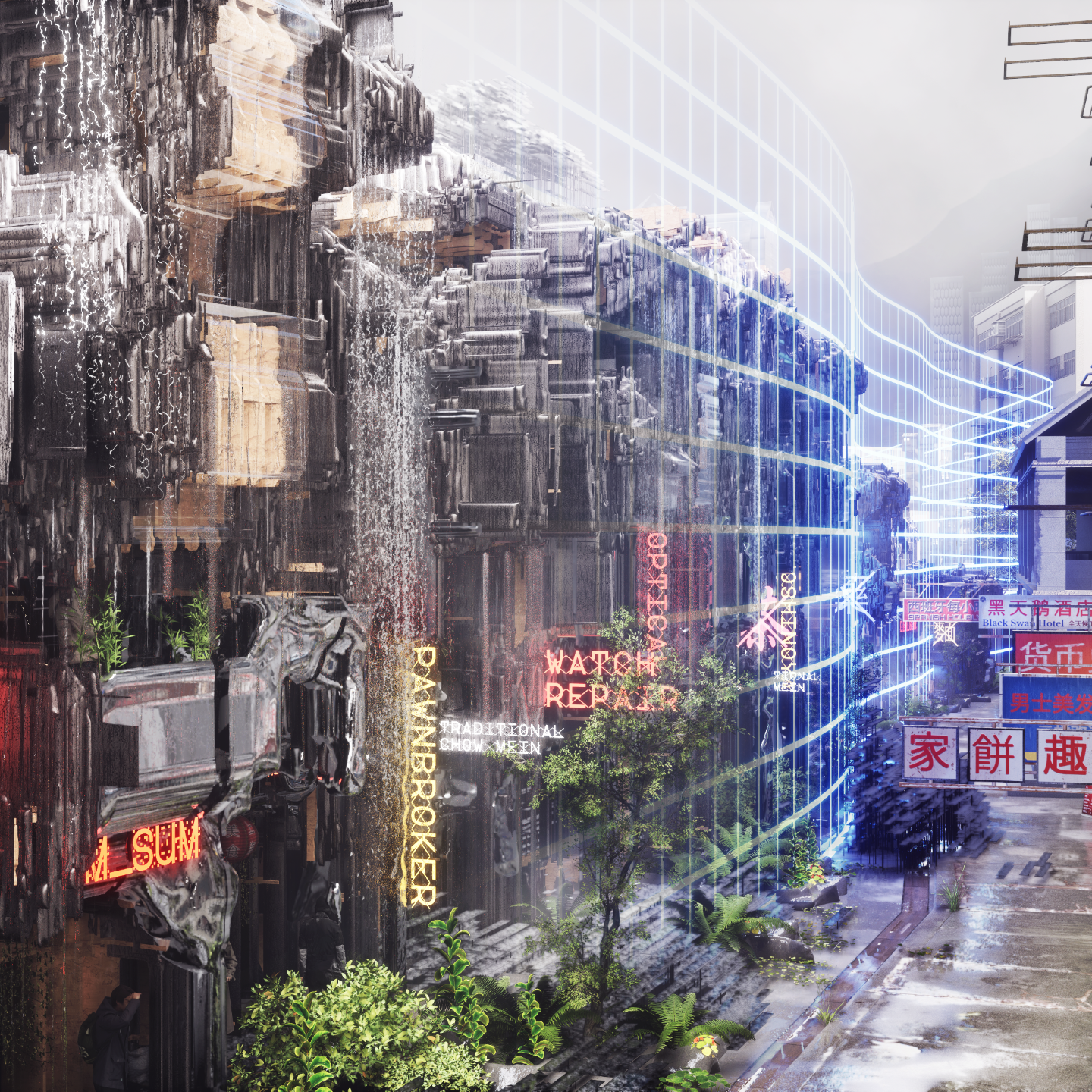

LR = 0.002 // Epoch = 008

LR = 0.002 // Epoch = 019

LR = 0.002 // Epoch = 120

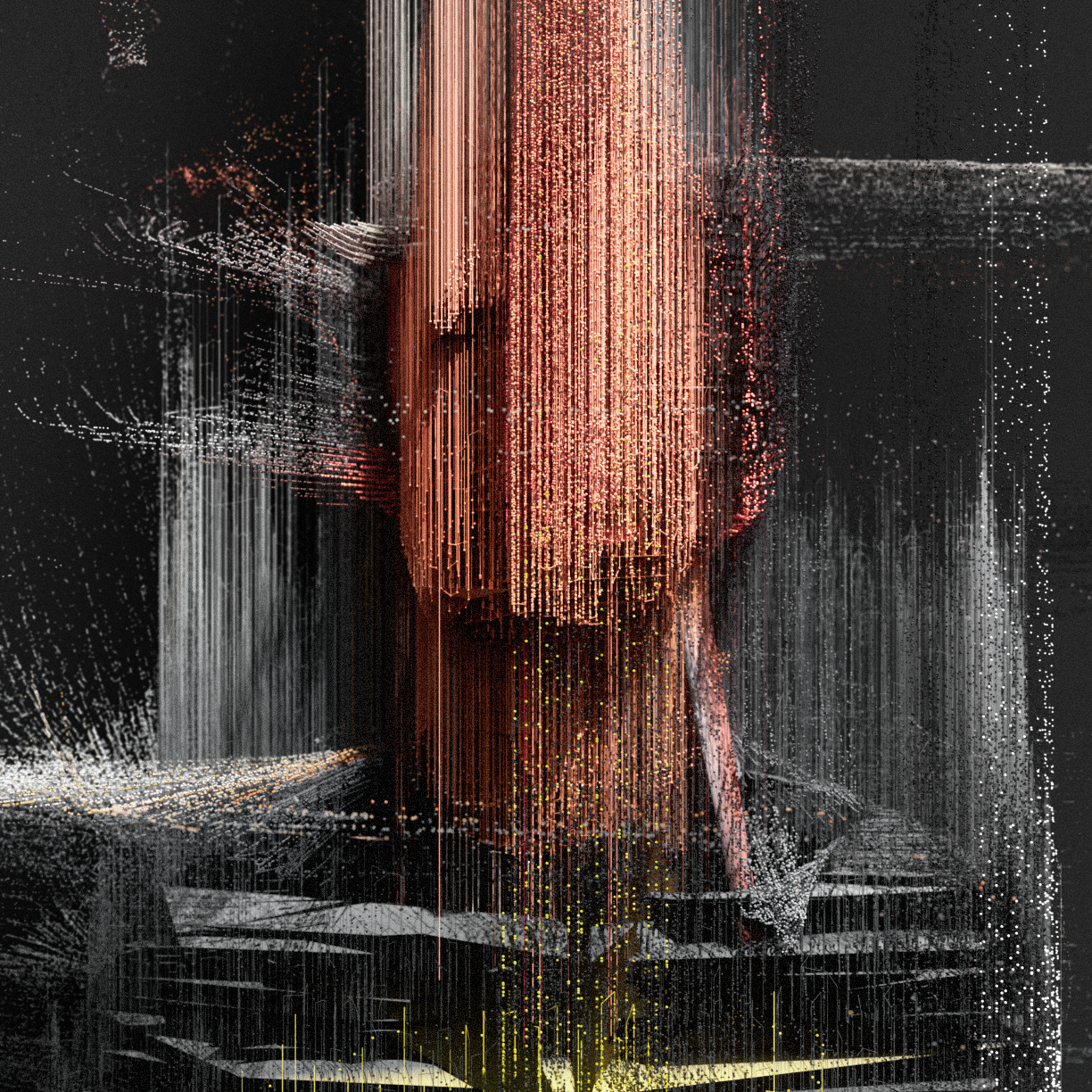

The results are 3D interpretations of machine language: ghost-like data faces that are not quite human, not quite machine, artefacts of in-betweenness. 3D printing made it possible to manifest each data face physically. When fed back into the confused eyes of machine vision, the face renders its wearer as something between human and nonhuman.